Background

Thanks to the endless transparency of the US military – for which they are rarely praised but often vilified – we know a lot about the DARPA 2020 AI test programme.

First flight of Boeing’s ATS (Airpower Teaming System), a long-range ‘Loyal Wingman’ UCAV developed in partnership with the Royal Australian Air Force. Boeing


An AI programme from the company Heron Systems was pitted – and this should be emphasised – in a virtual 1vs1, guns-only engagement against an experienced F-16 weapons instructor. The AI scored five convincing shots/victories without the human-piloted equivalent being able to bring guns to bear in a meaningful manner. This was undertaken using JSBSim, an open-source flight dynamics model rather than a commercial simulator product, but one that is likely the equal of Eagle Dynamics’ DCS series in terms of its flight modelling.

That Heron Systems – a small AI company – was selected over such leviathans as Lockheed Martin is a tremendous accomplishment but what was the point of the test and what was it actually trying to demonstrate?

Computer beats human in F-16: Round 1 – Observe

Significant responses followed the publicised outcome but not all were terribly helpful. There was a tendency – particularly among general commentary – to confuse ‘test’ with ‘prove’, as in: this ‘proved’ something about AI versus human pilots. It did not, nor was that the intention. The experiment was to demonstrate – to test – what an AI could accomplish at this stage. One key element of any test – hammered into teenagers during early science lessons – is to reduce the variables so that any outcomes can be understood and digested without worry over which inputs might have been the principal cause.

With this entirely justifiable approach, the test was conducted under the most basic of possible scenarios: a single pair of aircraft approached head-on (merged) at high-subsonic speeds and a series of medium altitudes, at which point they were free to manoeuvre. A victory was awarded to the first one obtaining a ‘nose-pointing’ aspect on the other within 3,000ft (roughly average effective gun-range). No missiles were included nor any radar, EO/IR systems or defensive aids. Neither participant had a wingman, nor did either have to worry about other threats, fuel, rules of engagement or wider operational aspects. It was the classic – or anachronistic – dogfight.
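For readers who think in code, the victory rule described above can be sketched in a few lines of Python. This is only an illustration of the idea, not the scoring logic used in the trial: the 3,000ft limit comes from the description above, while the function name `has_nose_on`, the flat 3D coordinates in feet and the 5-degree cone are assumptions made here, since no angular tolerance is stated.

```python
import math

GUN_RANGE_FT = 3000.0      # range limit quoted in the article
CONE_HALF_ANGLE_DEG = 5.0  # ASSUMPTION: the article gives no angular tolerance


def has_nose_on(shooter_pos, shooter_nose, target_pos,
                max_range_ft=GUN_RANGE_FT,
                half_angle_deg=CONE_HALF_ANGLE_DEG):
    """Return True if the target sits inside the shooter's assumed gun cone.

    Hypothetical helper: positions are (x, y, z) in feet and shooter_nose
    is any non-zero vector along the shooter's nose.
    """
    # Line-of-sight vector from shooter to target
    los = [t - s for s, t in zip(shooter_pos, target_pos)]
    distance = math.sqrt(sum(c * c for c in los))
    nose_len = math.sqrt(sum(c * c for c in shooter_nose))

    # Out of range, or a degenerate geometry, can never score
    if distance == 0.0 or nose_len == 0.0 or distance > max_range_ft:
        return False

    # Angle between the nose and the line of sight, via the dot product
    cos_off = sum(n * l for n, l in zip(shooter_nose, los)) / (nose_len * distance)
    cos_off = max(-1.0, min(1.0, cos_off))  # guard against rounding error
    return math.degrees(math.acos(cos_off)) <= half_angle_deg


# A target 2,000ft ahead and 100ft off the nose is inside the assumed cone;
# the same target at 4,000ft is simply out of range.
close_shot = has_nose_on((0, 0, 0), (1, 0, 0), (2000, 100, 0))
long_shot = has_nose_on((0, 0, 0), (1, 0, 0), (4000, 0, 0))
```

The point of so small a rule is the one made above: with the variables stripped back to range and pointing angle, the outcome of each engagement is easy to attribute.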

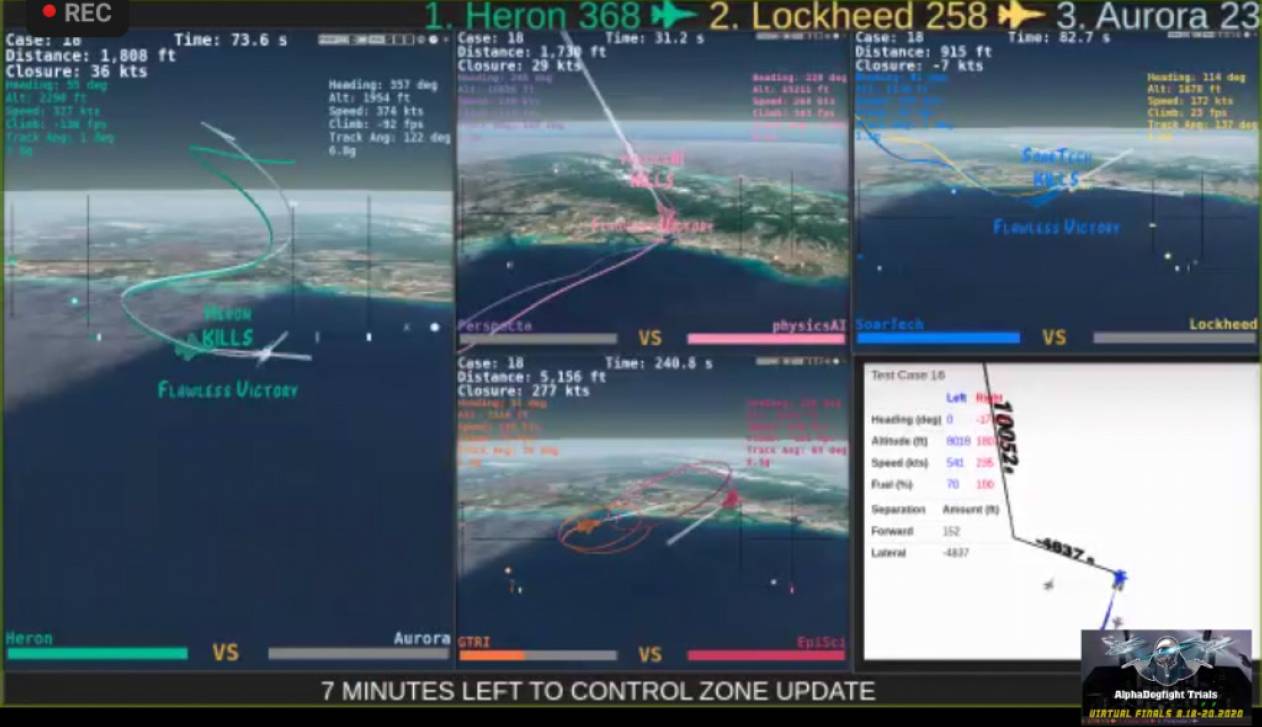

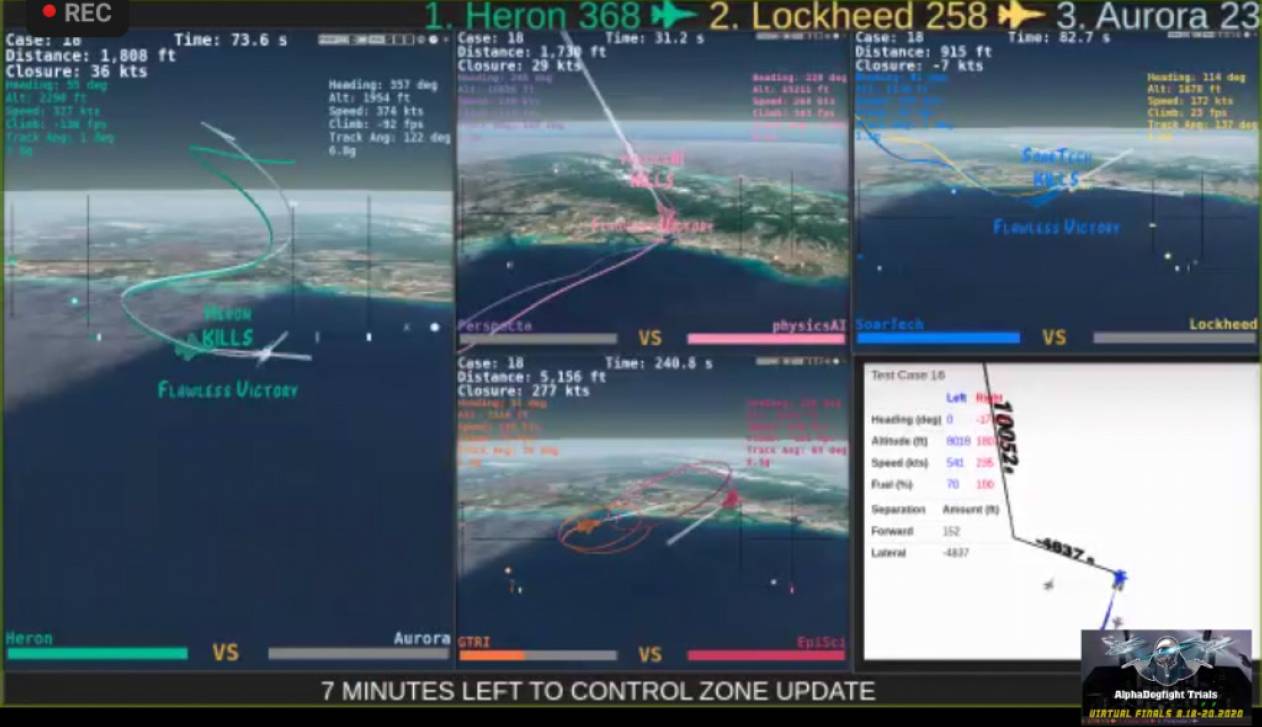

Heron Systems’ AI agent goes up against a Lockheed Martin AI agent in an AI vs AI test. DARPA


Before moving on to the wider implications, it is worth remembering that top-tier air forces still stress this kind of encounter – termed basic fighter manoeuvring (BFM) – as a key part of their syllabus.

Not only is it a lot of fun but it instils confidence in the pilot that control of their aircraft can become instinctive and that their brain – the intelligence – can focus on the far more taxing problems of the modern battlefield. It requires that the pilot surpasses a purely physical sense of an aircraft’s energy state and merely factors this into far more important calculations concerning how to manage the complex knowns, threatening known-unknowns and highly likely unknown-unknowns that comprise high-tempo operations. Skilled BFM is considered, therefore, not as an end in itself but as a tool to shape an outcome within a far more confusing and dynamic environment.

Computer beats human in F-16: Round 2 – Orient

Several YouTube videos exist of the engagement with third-party analysis. Though extremely interesting and illuminating, these are not the focus of this article. What the encounters do reveal, though, is the highly structured nature of the test. One critical consideration is that – being virtual and equal – the AI had perfect and precise knowledge of its opponent’s location at all times. This understanding – termed ‘situational awareness’ – would not have been possible had the same AI been flying an actual aircraft that lacked perfect spherical and high-fidelity vision, as well as instantaneous calculation of relative angles, energy states and similar information concerning its human opponent.

The winning AI takes on an experienced human F-16 pilot in DARPA ALPHA Dogfight simulated battle. DARPA


Not only is this a huge advantage to the AI programme (as the old saying goes: ‘lose sight, lose the fight’) but, added to the ‘guns-only’ nature of the engagement, it also precluded the human pilot from using a wider bag of weapons and systems tricks. In short, the AI was not concerned about anything more than the next 5-10 seconds of BFM. That is not only horrendously dangerous for actual aerial combat but it is almost a sure way to lose in a real scenario of multiple aircraft, threats, weapons, sensors and varying engagement ranges.

This is important with regard to the manner in which the AI engaged and the conclusions as to possible impact on combat aircraft. Almost without exception, the human pilot tried to control the fight and manage the encounter, largely to avoid low-probability shots that cost energy. Instead, he manoeuvred to negate his opponent and find a more favourable position. Meanwhile, the AI used its split-second timing and God-like accuracy to prioritise any opportunity. This is not to say that the human pilot was unaggressive but, owing to both its (arguably unrealistic) situational awareness and laser-like aim, the AI often achieved its snapshot.

Computer beats human in F-16: Round 3 – Decide

Referring to the opening elements of this article, the test staged was indeed only a test. What might we draw from this? The AI showed a clear strength in manoeuvring its virtual aircraft – within the artificial environment – and clearly ‘understood’ how to achieve this limited mission goal. While the above assessment has illustrated the caveats of such a specific scenario, even at this stage the same software would be very useful for a UAV or missile.


An aircraft that used its limited on-board sensors but, benefitting from a datalink, had a limited task and was not worried about its own survival is surely the next step for such a programme. However, arguably the AI shown in this test lacks some of the capabilities being developed for the USAF’s Golden Horde and similar swarming munitions programmes that ignore the immediate route to target in favour of a calculated, risk-minimising approach that ensures one of their number survives.

During the dogfight the AI prioritised ‘the moment’ and its own endeavours rather than a wider mission. This is not to say that a developed version would not be more altruistic but, in terms of combat operations, such behaviour is not necessarily that helpful. For the AI of a single missile, possibly. For a broadside of several missiles, less so. For a swarm of various AI-controlled aircraft engaged in a complex task, pretty much the opposite approach is needed. But, to reiterate, that was neither the object of the test nor the lesson that should be drawn from the specific iteration of the AI in question.

Computer beats human in F-16: Round 4 – Act

Where, then, might this go? At present there is no shortage of aerospace AI projects. The more sensible assessments of the test have concluded that the medium-term future for programmes such as this is improved human-machine interface (HMI) and improved autonomous vehicles. In the case of the former, an advanced version of the software tested could be invaluable in an aerial encounter by suggesting likely counter-efforts against an opponent, based on information obtained over the course of a conflict.

Thus, it advises/reminds a pilot that an air defence radar tends to have poor coverage at a specific range or that a certain opposing fighter-radar combination is vulnerable to ‘notching’ (manoeuvring to reduce their radar lock) at this specific point. Even within the visual arena – which will include missiles – an AI that ‘gets’ BFM can trigger countermeasures or pilot alerts as it watches the rear hemisphere, calculating relative energy states and position of friendly and hostile aircraft. As a piece of software this could (though possibly with difficulty) be added to legacy aircraft, such as the USAF’s new F-15EXs that will supposedly receive an improved and AI-equipped defensive aids suite (DAS) that learns, acts and advises as much as simply alerts.

The other obvious area is the now familiar Loyal Wingman. The chances of an operational AI fighter engaging in actual BFM before 2040 – let alone 2030 – remain pretty low but the rapid progress of Boeing Australia’s ATS suggests that more basic missions are on the horizon. The USAF has shown an interest in air-to-air armed UCAVs to protect aircraft, such as their AEW&C platforms. Such a creature would not necessarily dogfight but would have to manoeuvre so as to maximise its chances of defeating any opposition.

But again with the shortcomings


A wider problem, though, remains unaddressed by this test and possibly by other efforts currently under way. AI will relieve aircrew of some tasks and improve their efficiency in others. However, as noted above, warfare is about deception, about forcing an error, about knowing the correct second to deliver a punch. Unlike BFM, plotting a course around enemy radars or protecting a crewed aircraft, these deeper techniques delve into psychology. History is replete with victories and defeats that were based on deliberate ruse or accidental misinterpretation, with greater or lesser impact. AI will help remove some uncertainty and/or assist with penetrating the fog of war. However, unless it is equipped with a supreme ability to learn and adapt, there is the serious possibility that the AI itself becomes a weakness.

Under far less stressful circumstances, there has been a spate of incidents or near misses with civil aircraft where crews misused, failed to comprehend or ignored what their computers were saying. Within the dynamic and uncertain environment of warfare – with few real playbooks or libraries – the benefits of AI for combat aircraft may be undone by the fact that they themselves become victims of a clever, unconventional human (or human-AI team) opponent that ignores their OODA (observe, orient, decide, act) loop. Crews relying on the AI – possibly unaware of it being misled – become highly vulnerable. To use a simplified example, a crew unaware that its GPS was being jammed or distorted could easily be fooled into a serious error because they were used to relying on said system as accurate. That is possibly the tip of the AI-befuddlement iceberg and the more integrated the AI becomes, arguably the more catastrophic its impediment might become.

Clearly, the ‘I’ in ‘AI’ is supposed to prevent this occurring but the promises of new hardware and software rarely match all expectations. Concurrently, virtually no new defence process, system or weapon has remained dominant for long. There is always a counter and some of the most difficult are those not understood until a confrontation has actually begun. The last decade has seen a variety of state and non-state actors circumvent top-level software security. It is not difficult to see an opponent understanding how the AI perceives the world and adjusting either technology or doctrine accordingly. That is to be expected but it should raise a further question over what this test – and other ‘AI’-based weapons – demonstrated and what the short-term progress can actually deliver. ‘It’s the one you don’t see that gets you’ may become as much of a headache to the software programmer as for the pilot facing a robotic opponent.

The future contested EW battlespace and the requirement not to overload human pilots controlling ‘Loyal Wingman’ will drive increasing autonomy in systems like the UK’s LANCA. MoD/Crown copyright


A USAF F-16.